How to build ethical AI products that inspire trust?

A 2019 VentureBeat story claims that only 13% of AI projects make it into business production. IDC reports that a quarter of organizations see up to 50% of their AI projects fail. Recently, several worrying reports about AI applications have put AI under scrutiny again. Incidents involving Uber’s and Tesla’s autonomous systems have raised questions about trust in AI. Amazon’s AI-powered recruiting system was found to be gender-biased (Source: Quartz, Oct 10, 2018). Several studies have found that AI systems used in business are biased with respect to gender, race, and skin type. These business risks leave AI systems vulnerable to mistrust. Various data privacy and regulatory authorities also mandate that AI systems be sufficiently explainable to their target users.

When AI is under scrutiny, the first thing we try to understand is how the AI system is built: what data it is trained on, what algorithms are used, and how it is tested and validated. Most AI models are black boxes. Black box AI/ML models are those that essentially cannot answer questions such as:

- Why did it make certain predictions that led to a certain type of decision?

- Why did it avoid making other predictions for a given case?

- How to correct an error?

- When to trust AI and when not to?

In short,

- Current black box AI creates business risk.

- Black box AI creates confusion and doubts.

- AI systems are mistrusted and are not used to their full potential.

Prefixing the word “Explainable” to AI points to a solution to the problem at hand. Explainable AI is an approach to building transparent AI systems. An AI system built with the goal of transparency and explainability will tend to use algorithms that are not black box in nature. In other words, these “transparent” algorithms can provide the required answers to the questions above. The trade-off with this approach is that the accuracy these models achieve, and their performance, especially on large volumes of data, often fall short of expectations.

So, we need a system of explainable AI models that can be layered over the high-performing black box models to provide explainability and transparency. Such a system helps users evaluate model fairness and puts guardrails around AI models in the form of security and controls.
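One common way to layer explanations over a black box model is permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is illustrative only and is not a description of Subex's tooling. The `black_box` function, the toy `rows`, and all other names are hypothetical stand-ins chosen for this example.

```python
import random


def black_box(row):
    # Hypothetical stand-in for an opaque model: it only looks at feature 0.
    return 1 if row[0] > 0.5 else 0


def accuracy(model, rows, labels):
    # Fraction of rows where the model's prediction matches the label.
    hits = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return hits / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    # For each feature, shuffle its column and record the mean accuracy drop.
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            permuted = [
                row[:col] + [value] + row[col + 1:]
                for row, value in zip(rows, shuffled)
            ]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy data (assumed for illustration): two features, labels taken from the model.
rows = [
    [0.1, 0.9], [0.2, 0.1], [0.3, 0.8], [0.4, 0.2],
    [0.6, 0.7], [0.7, 0.3], [0.8, 0.6], [0.9, 0.4],
]
labels = [black_box(row) for row in rows]
importances = permutation_importance(black_box, rows, labels)
```

Here `importances[1]` comes out as zero because the stand-in model never reads the second feature, while `importances[0]` is positive. The explanation layer needs no access to the model's internals, which is why this style of technique can sit on top of a high-performing black box.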

How can explainable AI models help enterprises?

- They help verify the ML models and isolate direct and indirect impacts.

- They improve ML models by using user decisions, actions, and feedback to improve model outcomes.

- They will help discover newer insights.

- They debug ML model predictions.

- They help discover reasons for specific model decisions.

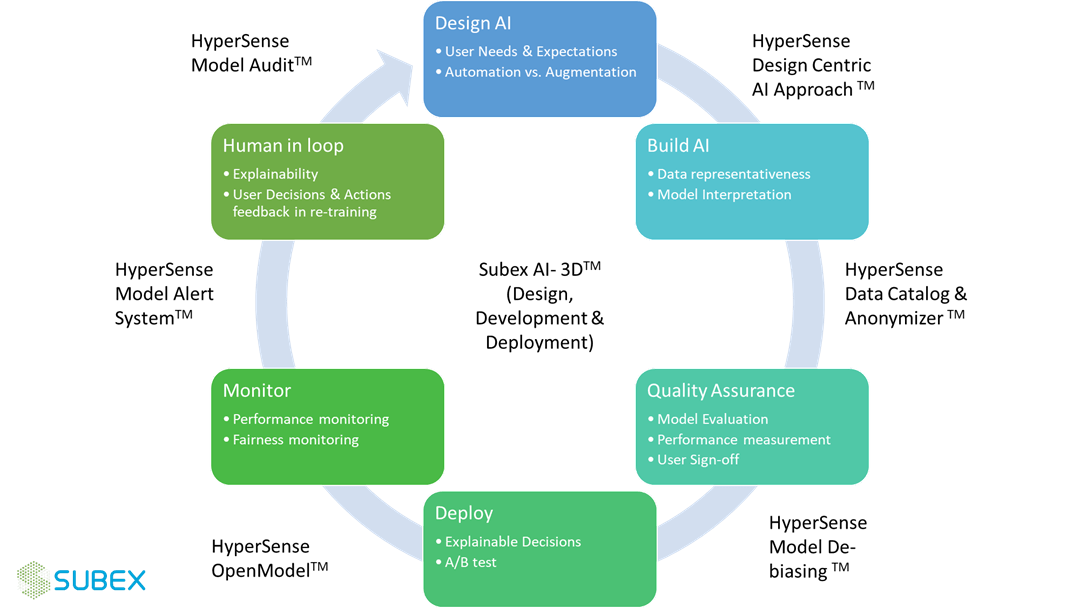

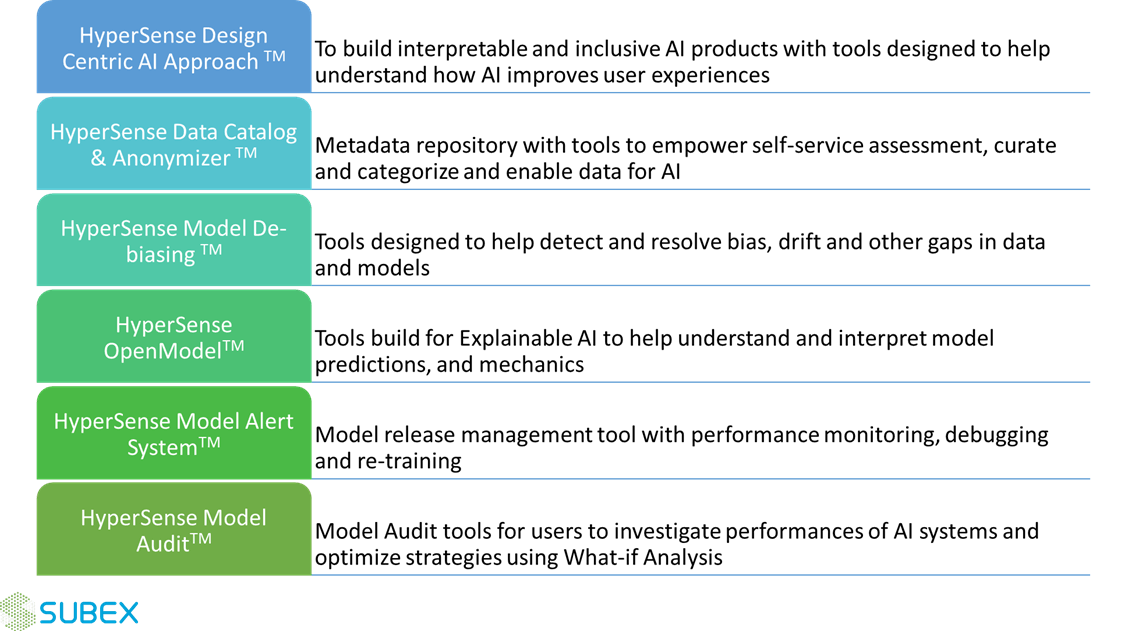

At Subex AI Labs, we apply the AI trust framework to design, develop, and deploy ML models from inception to production. This framework guides us in following our Digital Trust principles.

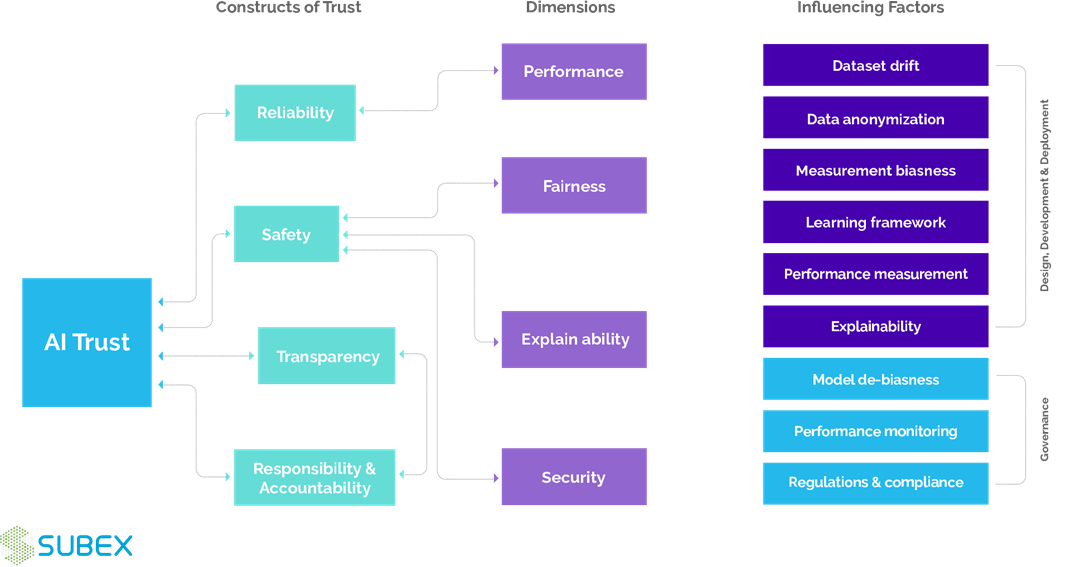

We look at AI Trust as a function of four key constructs: Reliability, Safety, Transparency, and Responsibility and Accountability. These core constructs are the pillars of AI trust in our products and solutions. Let me explain how to enable each core construct.

1. Reliability: AI products generally drive critical business decisions for our clients, and the underlying ML models, which learn the “rules” from the data to drive various decisions, come with a certain level of uncertainty. For example, there will always be a small percentage of misclassifications or prediction errors that drive incorrect decisions unless this is clearly understood and guarded against with human rules or conditional decisions. Understanding this uncertainty requires first understanding the scenarios and conditions in which the model’s response is reliably accurate. This understanding provides a level of confidence in the model, along with the conditions in which the model’s response may not hold up. Training our AI models with our ‘User-centric approach’ gives us the tools needed to ensure that the models provide the required performance, explainability, and fairness, thereby making them reliable.

2. Safety: It is a three-dimensional construct influenced by model fairness, explainability, and model security against malicious attacks and/or uncertain data behavior. When we design our AI products, we again apply our User-centric approach, which enables us to ensure that the models built are fair for all target users whose experience is AI-optimized. When we develop these models on the data, we ensure that the models are transparent and perform well in various test scenarios. We use various XAI tools and frameworks that help us and our clients’ users get the transparency needed to ensure that the models, and the business decisions based on them, are safe. Once such models are deployed, we regularly perform ‘model de-biasing’ using our de-biasing tools to ensure that the model stays safe during its entire lifecycle.

3. Transparency: It enables us to ensure that the AI products are transparent throughout the lifecycle. It is important to involve the human perspective in the design, development, and deployment stages of AI products. Our 3D approach to Design, Develop, and Deploy AI models enables us to keep human decisions, situations, and context in mind while designing, developing, and deploying AI models that enhance user experience.

Here is an overview of the frameworks on which we have based various tools with a human in the loop.

4. Responsibility and Accountability: We, at Subex, take full responsibility for how our AI products are built and how they work on user data. We provide full transparency in our AI products, clearly highlighting the short-term and long-term benefits and impacts of AI within a defined boundary. We work with our clients to build an ethical framework for the business that ensures data privacy and model security and maximizes user experience, which improves the overall Digital Trust of our clients’ products and services. We help our customers build ethical AI products that inspire customer trust.
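As a rough illustration of how the Reliability and Safety constructs above might be checked in practice, the sketch below shows two small helpers: one routes low-confidence predictions to human review (a simple form of guarding model uncertainty with conditional decisions), and one computes the demographic parity difference, a common fairness measure that compares positive-prediction rates across groups. The function names, the 0.8 threshold, and the sample data are assumptions made for this example, not Subex's actual tools or settings.

```python
def route_prediction(probability, threshold=0.8):
    # probability: a binary classifier's estimated probability of class 1.
    # Confidence is how far the model leans toward either class.
    confidence = max(probability, 1.0 - probability)
    label = 1 if probability >= 0.5 else 0
    # Hypothetical policy: automate only when the model is confident enough.
    decision = "automate" if confidence >= threshold else "human_review"
    return label, decision


def demographic_parity_difference(predictions, groups):
    # Gap between the highest and lowest positive-prediction rate per group.
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    rates = {group: positives[group] / totals[group] for group in totals}
    return max(rates.values()) - min(rates.values())


# Illustrative inputs (assumed): three scores and a small batch of predictions.
confident_positive = route_prediction(0.95)
uncertain_case = route_prediction(0.55)
confident_negative = route_prediction(0.10)

predictions = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
parity_gap = demographic_parity_difference(predictions, groups)
```

In this toy batch, group A receives positive predictions 75% of the time and group B 25% of the time, so `parity_gap` is 0.5. A gap that large would typically prompt a closer look at the model and its training data. What counts as an acceptable gap or confidence threshold is a business and policy decision rather than something the code can settle.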

At Subex, we take the utmost care in the way our AI products are built and the way we work on user data. We provide full transparency in our AI products, clearly highlighting the benefits and the impact of AI within a defined boundary. We work with our clients to build an ethical framework for the business that ensures data privacy and model security and maximizes user experience, enabling our clients to build AI trust and digital trust.
