Agility, Tools & Best Practices of ETL

What is ETL and why do we need it?

ETL (extract, transform, load) is a mature approach to data integration. Data is first extracted from heterogeneous sources & converted into a unified format. In the subsequent steps, the data is cleaned & validated against a predefined set of rules.

ETL tools can also deliver a considerable performance boost by streaming input from arbitrarily large XML, CSV, and fixed-length field (FLF) files and relational databases, and by streaming output to equally large XML, CSV, and FLF files or inserting it into a database.

ETL/ELT Process: Getting into a Few More Details

Extraction:

The first step of the ETL process is extraction. Data from the various source systems should be extracted into a staging area first, not loaded directly into the data warehouse, because the extracted data arrives in multiple formats and may be corrupted.

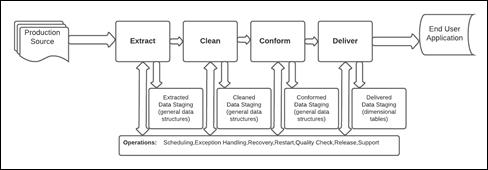

Fig: Four Steps of Staging Operation
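A minimal sketch of extraction into a staging area, using only the Python standard library. The sample inputs, the in-memory `staging` list, and the helper names `extract_csv` and `extract_json_lines` are assumptions made for illustration. The idea shown is that records from different formats land in staging tagged with their source, while corrupted input is set aside instead of reaching the warehouse.

```python
import csv
import io
import json

def extract_csv(text, source):
    """Yield one staged record per CSV row, tagged with its source system."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": source, "payload": dict(row)}

def extract_json_lines(text, source, rejects):
    """Yield staged records from JSON lines; unparseable lines go to `rejects`."""
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue
        try:
            yield {"source": source, "payload": json.loads(line)}
        except json.JSONDecodeError as exc:
            rejects.append({"source": source, "line": line_no, "error": str(exc)})

# Hypothetical inputs: a CSV export and a JSON-lines feed with one truncated line.
crm_csv = "id,country\n1,USA\n2,United States\n"
web_jsonl = '{"id": 3, "country": "America"}\n{"id": 4, "country": \n'

rejects = []
staging = list(extract_csv(crm_csv, "crm"))
staging += list(extract_json_lines(web_jsonl, "web", rejects))

print(len(staging), "staged;", len(rejects), "rejected")
```

Keeping rejects separate means a bad source file can be inspected and re-extracted without touching data that has already been loaded.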

Transformation:

The second step of the ETL process is transformation. In this step, a set of rules or functions is applied to the extracted data to convert it into a single standard format. It may involve the following tasks:

- Filtering: loading only specific attributes into the data warehouse.

- Cleaning: replacing NULL values with default values and standardizing variants, for example mapping USA, United States, and America to the single value USA.

- Joining: combining multiple attributes into one.

- Splitting: splitting a single attribute into multiple attributes.

- Sorting: ordering tuples by one or more attributes (generally the key attribute).
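The five tasks above can be sketched in a single function. This is an illustrative example only: the column names, the `COUNTRY_ALIASES` mapping, and the `UNKNOWN` default are assumptions, not part of any particular ETL tool.

```python
# Hypothetical mapping of country spellings to one standard value.
COUNTRY_ALIASES = {"usa": "USA", "united states": "USA", "america": "USA"}

def transform(rows):
    out = []
    for row in rows:
        # Cleaning: default for missing values, one spelling per country
        country = (row.get("country") or "UNKNOWN").strip()
        country = COUNTRY_ALIASES.get(country.lower(), country)
        # Splitting: one "full_name" attribute becomes two
        first, _, last = (row.get("full_name") or "").partition(" ")
        # Joining: city and country are combined into one attribute
        location = f"{row.get('city') or 'UNKNOWN'}, {country}"
        # Filtering: only the attributes the warehouse needs are kept
        out.append({"id": row["id"], "first_name": first, "last_name": last,
                    "country": country, "location": location})
    # Sorting: order tuples by the key attribute
    return sorted(out, key=lambda r: r["id"])

rows = [
    {"id": 2, "full_name": "Grace Kim", "city": "New York",
     "country": "United States", "email": "g@example.com"},
    {"id": 1, "full_name": "Ann Lee", "city": None,
     "country": None, "email": "a@example.com"},
    {"id": 3, "full_name": "Raj Patel", "city": "Boston",
     "country": "America", "email": "r@example.com"},
]

result = transform(rows)
for r in result:
    print(r)
```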

Loading:

The third and final step of the ETL process is loading. In this step, the transformed data is finally loaded into the data warehouse.
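As a rough sketch of the loading step, the example below uses Python's built-in `sqlite3` module as a stand-in for a data warehouse. The table name `dim_customer`, its columns, and the `load` helper are hypothetical. Each batch is loaded inside one transaction, so a failing batch leaves the target table unchanged.

```python
import sqlite3

def load(conn, rows):
    """Insert transformed rows in one transaction; roll back fully on failure."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT INTO dim_customer (id, name, country) "
            "VALUES (:id, :name, :country)",
            rows,
        )

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dim_customer ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, country TEXT NOT NULL)"
)

load(conn, [
    {"id": 1, "name": "Ann Lee", "country": "USA"},
    {"id": 2, "name": "Grace Kim", "country": "USA"},
])

try:
    # The second row repeats key 1, so the whole batch is rolled back.
    load(conn, [
        {"id": 3, "name": "Raj Patel", "country": "USA"},
        {"id": 1, "name": "Duplicate", "country": "USA"},
    ])
except sqlite3.IntegrityError as exc:
    print("batch rejected:", exc)

count = conn.execute("SELECT COUNT(*) FROM dim_customer").fetchone()[0]
print("rows in warehouse:", count)
```

Whether an all-or-nothing batch is the right choice depends on the warehouse and the load volume; some pipelines divert failing rows to an error table instead.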

The Need for Agility in ETL Processes & Tools to Cope with the Current Business World:

Scenario:

With social media, IoT, and other Big Data drivers taking center stage, ETL has become imperative for consolidating transactional data in Hadoop (or equivalent) environments and transforming it for data warehouses that handle massive data scales. Social media posts can contain video, audio, images, maps, and other content types. In addition, data from social media (blogs, tweets, posts) is unstructured, which makes it a challenge to extract useful information during integration. Business applications and connected devices produce continuous flows of data. ETL has therefore had to evolve from batch processing of predictable, well-structured data to handling semi-structured and nested data objects, such as JSON and XML, in micro-batches and in real time.

- ETL has evolved to support schema detection, as well as the ability to handle schema changes automatically to some extent.

- When used with an enterprise data warehouse (data at rest), ETL provides a deep historical context for the business.

- By providing a consolidated view, ETL makes it easier for business users to analyze and report on data relevant to their initiatives.

- Organizations need both ETL and ELT to bring data together, maintain accuracy, and provide the auditing typically required for data warehousing, reporting, and analytics.
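To make the handling of nested data and schema changes more concrete, here is a small sketch that flattens nested JSON-like events per micro-batch and reports columns it has not seen before. The event shapes and the function names `flatten` and `process_micro_batch` are assumptions for illustration; real tools detect schema drift in more sophisticated ways.

```python
def flatten(obj, prefix=""):
    """Flatten nested dicts into one level with dotted column names."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat

def process_micro_batch(events, known_columns):
    """Flatten one batch; return (rows, columns not seen in earlier batches)."""
    rows = [flatten(event) for event in events]
    seen = set().union(*(row.keys() for row in rows))
    new_columns = seen - known_columns
    known_columns.update(new_columns)  # remember them for the next batch
    return rows, new_columns

known = set()
batch1 = [{"id": 1, "user": {"name": "ann", "geo": {"country": "USA"}}}]
batch2 = [{"id": 2, "user": {"name": "bob"}, "device": {"type": "sensor"}}]

rows1, new1 = process_micro_batch(batch1, known)
rows2, new2 = process_micro_batch(batch2, known)

print(sorted(new1))
print(sorted(new2))
```

A pipeline could use the reported new columns to extend the target table or to raise an alert, depending on how much automatic schema evolution is acceptable.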

Following these best practices helps ensure a successful design and implementation of an ETL solution:

- Analyzing Source Data

- Validation

- Optimizing the ETL Solution

- Error Handling, Logging and Alerting

- Scheduling, Auditing & Monitoring ETL Jobs

- ETL Auditing

Auditing in an ETL process is intended to satisfy the following objectives:

- Check for data anomalies beyond simply checking for hard errors

- Capture and store an electronic trail of any material changes made to the data during transformation
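Both objectives can be illustrated with a short sketch. The in-memory `audit_log`, the 50% `tolerance`, and the function names are assumptions chosen for the example; a production pipeline would persist the trail and tune the anomaly rule to its own data.

```python
from datetime import datetime, timezone
from statistics import mean

audit_log = []  # stand-in for a persistent audit table

def record_change(row_id, field, old, new, rule):
    """Append one audit entry when a transformation actually changes a value."""
    if old != new:
        audit_log.append({
            "row_id": row_id, "field": field, "old": old, "new": new,
            "rule": rule, "at": datetime.now(timezone.utc).isoformat(),
        })

def row_count_anomaly(history, current, tolerance=0.5):
    """Flag a load whose row count deviates from the recent average
    by more than `tolerance` (a fraction of that average)."""
    if not history:
        return False
    baseline = mean(history)
    return abs(current - baseline) > tolerance * baseline

# A value changed by a cleaning rule is recorded; an unchanged one is not.
record_change(7, "country", "United States", "USA", "country_alias")
record_change(8, "country", "USA", "USA", "country_alias")

# No hard error occurred, but today's load is far smaller than usual.
recent_counts = [1000, 1040, 980]
print(row_count_anomaly(recent_counts, 310))
print(row_count_anomaly(recent_counts, 990))
print(len(audit_log))
```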

What are the comparison criteria for ETL tools?

The criteria under each category below relate directly to the business and technical requirements for selecting an ETL tool.

| Infrastructure | Functionality | Usability |
| --- | --- | --- |
| Platforms supported | Debugging facilities | Data quality / profiling |
| Performance | Future prospects | Reusability |
| Scalability | Batch vs real-time | Native connectivity |
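One common way to apply such criteria is a weighted scoring matrix. The sketch below is purely illustrative: the weights, the 1 to 5 ratings, and the tool names `tool_a` and `tool_b` are made up and do not describe real products.

```python
# Hypothetical importance weights for a few of the criteria (summing to 10).
WEIGHTS = {
    "Performance": 3,
    "Scalability": 3,
    "Native connectivity": 2,
    "Reusability": 2,
}

def weighted_score(scores, weights=WEIGHTS):
    """Sum of weight * rating over every weighted criterion."""
    return sum(weight * scores[criterion] for criterion, weight in weights.items())

# Illustrative 1-5 ratings for two made-up tools.
candidates = {
    "tool_a": {"Performance": 4, "Scalability": 3,
               "Native connectivity": 5, "Reusability": 2},
    "tool_b": {"Performance": 3, "Scalability": 5,
               "Native connectivity": 3, "Reusability": 4},
}

ranking = sorted(candidates,
                 key=lambda name: weighted_score(candidates[name]),
                 reverse=True)
for name in ranking:
    print(name, weighted_score(candidates[name]))
```

The weights are where business needs enter the comparison: a team that prioritizes real-time delivery would weight the criteria differently from one focused on batch reporting.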

Conclusion:

The quick takeaway from this ETL study is that consumer focus is on the agility of the ETL tool and process.

The main trade-off is performance, given the continuous streaming of data from disparate sources.

Depending on the use case, either ETL or ELT is applied; both involve data transformation, but in a different order.

To make the best pick, select an ETL tool that fits the specific business needs, the data requirements & the commercial aspects of the ETL technologies under consideration.
